As an example of a problem that cannot be properly overcome within the current system, I offer the mundane problem of choosing the right soap to wash one's hands with.

While soap recipes vary widely, most added ingredients remain unaltered in the final product and do not affect the soap's cleaning ability. The ingredients that do, however, are either glycerol or glycerin, which are hydrolyzed into two types of soap:

The task faced by the soap manufacturer is thus to select one of the two, or to find the correct mixing ratio of both, so as to balance cleaning power against irritation. The problem is that, since we are all different people with different soap usage habits, it is impossible for a manufacturer to find a balance that is optimal for all of us. Our system compensates by offering different brands of soap: each manufacturer attempts to find its own optimum, and those that fail go out of business. This solution is clearly lacking. Not only does it leave each soap user in the world today with no way of knowing which soap is optimal for him or her, it also makes it very likely that manufacturers will aim to keep their ratios optimal for the average user, rather than provide a variety that suits each user's optimum.

This problem lends itself well to information technology: if the manufacturers knew the habits of soap users, they could quickly find the optimal ratio between glycerol- and glycerin-based soaps. However, as soon as one approaches the problem, a number of secondary problems arise:

In a Technate, the pursuit of profit is no longer key, but there is still variety: several soap-making organizations may make soap independently of each other, with varying popularity. Since market share is irrelevant in a system where you get to do what you want regardless, these organizations would very likely share their soap recipes openly. With the proper research done by independent researchers, calibration curves could be calculated covering all hand-washing frequencies. Software could then easily be designed to let individual soap users calculate which soap brand is best for them, while the information on how often they use soap never leaves their home, thus preserving their privacy.
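To make the idea more concrete, here is a minimal Python sketch of such a home calculator. Everything in it is an assumption for illustration: the `SoapRecipe` records, the ratio scale (taken here as the share of the first of the two soap types in the blend) and especially the linear `optimal_ratio` calibration curve, which merely stands in for whatever curve independent researchers would actually publish.

```python
from dataclasses import dataclass


@dataclass
class SoapRecipe:
    brand: str
    ratio: float  # hypothetical: share of the first soap type in the blend, 0.0 to 1.0


def optimal_ratio(washes_per_day: float) -> float:
    """Hypothetical calibration curve: the more often one washes, the milder the blend.

    The linear shape and both coefficients are placeholders for a curve that
    independent researchers would publish.
    """
    ratio = 0.8 - 0.05 * washes_per_day
    return min(1.0, max(0.0, ratio))  # keep the result inside the valid range


def best_soap(recipes: list[SoapRecipe], washes_per_day: float) -> SoapRecipe:
    """Pick the published recipe closest to the user's personal optimum.

    Runs entirely on the user's own computer: only the public recipes are read,
    and the washing frequency is never sent anywhere.
    """
    target = optimal_ratio(washes_per_day)
    return min(recipes, key=lambda recipe: abs(recipe.ratio - target))


if __name__ == "__main__":
    published = [SoapRecipe("Brand A", 0.7), SoapRecipe("Brand B", 0.5), SoapRecipe("Brand C", 0.2)]
    print(best_soap(published, washes_per_day=6).brand)  # prints "Brand B"
```

The only input describing the user, `washes_per_day`, is used locally, which is the privacy property described above; the recipes themselves are public.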

The solution described above is highly specific and cannot be used to solve most problems. It also assumes that the structure of manufacturers, consumers and independent researchers will exist simply due to the nature of the Technate. It is therefore important to recognize that in the world as it is today, these independent groups not only already exist, but already function in this particular way, albeit to a limited extent.

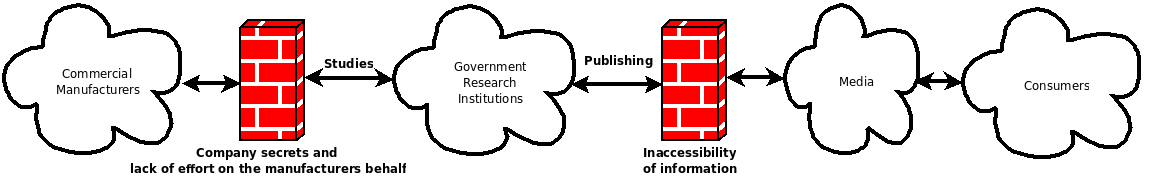

While the basic theory surrounding commercial manufacturers, consumers and government research institutions is exactly what we need to organize the solution above, in reality the exchange of information between these groups is usually obstructed, and limited at best. Collecting the necessary information typically requires effort on the manufacturer's part, and in commerce effort is money, possibly money the manufacturer does not want to spend. Furthermore, there is usually no mechanism linking the government research institutions with the consumers, or with the media that inform them, to ensure that consumers know where to look for the information they require, and the research often does not provide conclusions that a consumer could use.

These problems can be resolved with technology. In fact, the inaccessibility of research material to the media and consumers is currently being addressed using the Internet. Government research institutions have created web pages on which they publish the results of their studies, or at least information on where to find this data through computerized library systems. The media have become peers in this system: their job has shifted from publishing to processing the data they obtain so that it is easier to understand. In truth, we are not quite there yet, but this is the direction in which we are heading. Note, however, that manufacturers are not part of this improvement process, and the old barriers that made it difficult for researchers to work with them still persist, for the same financial reasons.

While the manufacturers' unwillingness to share information is partly based on the trade secrets they must keep to prevent others from taking their market share, most of the information that manufacturers withhold is simply information they are not willing to spend money on publishing. This is a reasonable reservation, as most companies fight day to day for survival and cannot afford to invest time in this kind of charity. However, if software were available that offers this information readily and can be connected to the Internet with minimal effort and no explicit cost, it should be much easier to convince manufacturers to publish it. This would remove the remaining obstacles, so that researchers, the media and consumers could access accurate data at any time.

To enable such a software package to offer information seamlessly over the Internet, the following communication standards are chosen: Ethernet, IP (Internet Protocol), TCP (Transmission Control Protocol), HTTP (Hypertext Transfer Protocol), XML (eXtensible Markup Language) and RDF (Resource Description Framework). Because these standards depend on one another, and for convenience, the phrase "XML over HTTP" will be used in this article to refer to all of them used together.

Ethernet is a physical and data-link layer standard for connecting compatible devices into the tree-structured networks through which devices are connected to the Internet today.[11] Ethernet interface chips are easily available on the industrial market and, while not ideal, represent a low-cost, highly compatible solution that can be connected directly to the Internet without additional programming or hardware design. TCP and IP are the core protocols of the Internet. Together they allow data streams to be sent, with automatic recovery from transmission errors[12], from device to device across any number of intermediate devices whose only role is to relay the data[13]. HTTP is the protocol used to transfer files over TCP/IP. While originally designed for HTML (HyperText Markup Language), it can also be used to transfer XML. It supports compression, authentication and other features that may be dynamically negotiated between the two endpoints[14].
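Since HTTP is a plain-text protocol carried over a TCP stream, its messages are easy to illustrate. The following Python sketch assumes HTTP/1.0 and uses a hypothetical host name and path; it builds a GET request and splits a response into status code, headers and body without touching the network. It is meant only to show the message format; real software would normally rely on an existing HTTP library.

```python
def build_get_request(host: str, path: str) -> bytes:
    """Build an HTTP/1.0 GET request asking for XML."""
    # Request line, header lines, then an empty line; every line ends in CRLF.
    lines = [
        f"GET {path} HTTP/1.0",
        f"Host: {host}",
        "Accept: application/xml",
        "",
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def parse_response(raw: bytes) -> tuple[int, dict[str, str], bytes]:
    """Split a complete HTTP response into status code, headers and body."""
    head, _, body = raw.partition(b"\r\n\r\n")  # headers end at the first empty line
    status_line, *header_lines = head.decode("iso-8859-1").split("\r\n")
    status = int(status_line.split(" ", 2)[1])  # "HTTP/1.0 200 OK" -> 200
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()  # header names are case-insensitive
    return status, headers, body


if __name__ == "__main__":
    print(build_get_request("device.example.com", "/data.xml"))
    print(parse_response(b"HTTP/1.0 200 OK\r\nContent-Type: application/xml\r\n\r\n<data/>"))
```

On the wire, the request bytes would simply be written into a TCP connection to port 80 of the device, and the response read back until the device closes the connection.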

These four standards (Ethernet, IP, TCP and HTTP) were chosen for their wide support on the Internet and their suitability for this kind of purpose.

XML is a loosely defined but highly interoperable standard for offering data. The advantage of offering data in XML is that little or no human intervention is required to make the data readable to software. This means less time spent working with the data, near real-time access and lower costs.

Because XML does not define the exact format of the data it carries, any type of data can be stored in it. However, this also means that the same type of data offered by two different sources is not necessarily formatted the same way[15]. Fortunately, a wide variety of software already exists to help translate between XML formats, such as XSLT (Extensible Stylesheet Language Transformations) processors[16]. XML is also neutral: it is a common, widely supported standard that is not owned or controlled by any particular corporation.
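As an illustration of such a translation, the sketch below maps a hypothetical vendor format (`<log>` containing `<sample>` elements with `<when>` and `<value>` children) onto a `<data>`/`<entry>` format in the `http://www.example.com/our/dataFormat` namespace. An XSLT stylesheet would normally declare this mapping; since Python's standard library has no XSLT processor, the same rule is written by hand with `xml.etree.ElementTree` as a stand-in.

```python
import xml.etree.ElementTree as ET

TARGET_NS = "http://www.example.com/our/dataFormat"


def translate(source_xml: str) -> str:
    """Map a hypothetical <log>/<sample> format onto the <data>/<entry> format.

    Does by hand what an XSLT stylesheet would normally declare:
    each <sample> becomes one <entry time="...">value</entry>.
    """
    ET.register_namespace("", TARGET_NS)  # serialize the target namespace as the default
    source = ET.fromstring(source_xml)
    data = ET.Element(f"{{{TARGET_NS}}}data")
    for sample in source.iter("sample"):
        entry = ET.SubElement(data, f"{{{TARGET_NS}}}entry")
        entry.set("time", sample.findtext("when", "").strip())
        entry.text = sample.findtext("value", "").strip()
    return ET.tostring(data, encoding="unicode")


if __name__ == "__main__":
    vendor_xml = (
        "<log><sample><when>1237766460</when><value>1548</value></sample>"
        "<sample><when>1237766461</when><value>1562</value></sample></log>"
    )
    print(translate(vendor_xml))
```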

RDF is chosen because of its suitability for this purpose. In RDF, the data provided is organized into properties with values, and the XML format is given a name (a "namespace"). This property/value structure helps make the data unambiguous and easier for software to read. Anyone can give such a name to a set of property names and value types, and since the name takes the form of a URI, usually a URL, it may be associated with an existing Internet address[17]. Giving a set of property names a common name makes it possible to work out, without unnecessary human assistance, which file should be used to translate between different XML formats.
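The property/value idea can be shown with a small RDF/XML document. In this Python sketch, the RDF namespace is the standard one, while the `http://www.example.com/our/properties#` vocabulary, the property names and the device address are hypothetical. Reading the document back shows that each property's full name is its namespace followed by its local name, which is what lets software recognize the vocabulary without human help.

```python
import xml.etree.ElementTree as ET

RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
EX_NS = "http://www.example.com/our/properties#"  # hypothetical property vocabulary


def describe(subject: str, properties: dict[str, str]) -> str:
    """Write property/value pairs about one resource as RDF/XML."""
    ET.register_namespace("rdf", RDF_NS)
    ET.register_namespace("ex", EX_NS)
    root = ET.Element(f"{{{RDF_NS}}}RDF")
    description = ET.SubElement(root, f"{{{RDF_NS}}}Description", {f"{{{RDF_NS}}}about": subject})
    for name, value in properties.items():
        ET.SubElement(description, f"{{{EX_NS}}}{name}").text = value
    return ET.tostring(root, encoding="unicode")


def triples(rdf_xml: str) -> list[tuple[str, str, str]]:
    """Read the document back as (subject, property URI, value) triples."""
    root = ET.fromstring(rdf_xml)
    result = []
    for description in root.findall(f"{{{RDF_NS}}}Description"):
        subject = description.get(f"{{{RDF_NS}}}about")
        for prop in description:
            # A tag like "{http://...#}temperature" carries the namespace and
            # the local name; joined together they form the property's URI.
            namespace, _, local_name = prop.tag[1:].partition("}")
            result.append((subject, namespace + local_name, prop.text))
    return result


if __name__ == "__main__":
    doc = describe("http://device.example.com/bioreactor1", {"temperature": "37.0", "ph": "7.2"})
    print(doc)
    print(triples(doc))
```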

Examples of data sources that are already provided in XML over HTTP include:

Examples of data sources that could, or should, be providing data in XML over HTTP include:

There are many more such sources. If many of them were made available through XML over HTTP, consumers and the media would no longer have to speculate about these numbers; with the help of researchers, they would have direct access to accurate, up-to-date values.

For the commercial manufacturing industry as a whole to begin using software that offers XML over HTTP, no single effort would suffice, as there will always be competing products that manufacturers may prefer over one that uses XML over HTTP. Therefore, rather than building a single complete software package that offers XML over HTTP, the effort should focus on getting the software industry to adopt the technology as a good solution to existing problems. By creating applications that use XML over HTTP to produce unique results that are most easily achieved with this technology, the rest of the software industry will be forced to implement XML over HTTP in their own software in order to compete.

The main advantages of applications that utilize XML over HTTP are:

The previous parts of this article have described the standards in use in some detail. This might lead one to believe that implementing XML over HTTP on an embedded device, or in a similar situation, would be more work than it is worth. This is not the case.

First of all, embedded hardware that supports Ethernet is easily available in the industry[24]. Furthermore, there are complete embedded web server libraries and software packages that can be used for free[25]. A full-scale HTTP server is not actually required, since an XML over HTTP device simply needs to transmit its data when requested; software capable of responding to the HTTP/1.0 "GET" and "POST" methods would probably suffice.
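To give a sense of how little such a responder needs, here is a sketch using Python's standard `http.server` module as a stand-in for an embedded web server library (the module itself is not intended for production use). The resource path `/data.xml`, port 8080, and the idea of treating a POST body as a control command are assumptions for illustration, not part of any particular device.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical reading, in the format of the example data file.
XML_BODY = (
    b'<?xml version="1.0"?>'
    b'<data xmlns="http://www.example.com/our/dataFormat">'
    b'<entry time="1237766460">1548</entry></data>'
)


class DeviceHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.0"  # one request per connection keeps things simple

    def do_GET(self):
        if self.path != "/data.xml":  # hypothetical resource name
            self.send_error(404)
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/xml")
        self.send_header("Content-Length", str(len(XML_BODY)))
        self.end_headers()
        self.wfile.write(XML_BODY)

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        # Store the raw command; a real device would validate and apply it.
        self.server.last_command = self.rfile.read(length)
        self.send_response(204)  # accepted, nothing to return
        self.end_headers()

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def run(port: int = 8080) -> None:
    """Serve the device data until the process is stopped."""
    HTTPServer(("0.0.0.0", port), DeviceHandler).serve_forever()
```

Calling `run()` on the device would make the data available at `http://<device address>:8080/data.xml` to any HTTP client on the network.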

Second, XML and RDF turn out not to be complicated at all. Here is an example of what an XML file output by a device might look like:

    <?xml version="1.0"?>
    <data xmlns="http://www.example.com/our/dataFormat">
      <entry time="1237766460">1548</entry>
      <entry time="1237766461">1562</entry>
      ...
      <entry time="1237769932">1582</entry>
    </data>

When sent over HTTP, this data block would represent a set of values recorded by a device using XML over HTTP, with time stamps in UNIX time format, in an XML vocabulary (namespace) owned by Example Inc. A program written in i386 assembly for Linux that can create and update an XML file like this takes fewer than 120 instructions.
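For readers who prefer a higher-level language to assembly, the following Python sketch performs the same task of creating and updating such a file. It is a different approach from the assembly program mentioned above, not a translation of it; the file path and the integer sensor value are hypothetical, and rewriting the whole file on every reading is chosen for simplicity rather than efficiency.

```python
import time
import xml.etree.ElementTree as ET
from typing import Optional

NS = "http://www.example.com/our/dataFormat"


def append_reading(path: str, value: int, timestamp: Optional[int] = None) -> None:
    """Add one <entry time="...">value</entry> to the data file, creating it if needed."""
    ET.register_namespace("", NS)  # keep Example Inc.'s namespace as the default
    try:
        tree = ET.parse(path)
    except FileNotFoundError:
        tree = ET.ElementTree(ET.Element(f"{{{NS}}}data"))
    entry = ET.SubElement(tree.getroot(), f"{{{NS}}}entry")
    # UNIX time: whole seconds since 1970-01-01 00:00:00 UTC
    entry.set("time", str(int(time.time()) if timestamp is None else timestamp))
    entry.text = str(value)
    tree.write(path, encoding="utf-8", xml_declaration=True)
```

A sampling loop on the device would call `append_reading("data.xml", reading)` once per measurement, and the web server would serve the resulting file.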

Finally, parsing this data or converting it into another format is easily done with XSLT, again using existing free software[26]. The XML data can be converted fairly directly into images[27] or web pages, and other options remain open.
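As a rough equivalent of an XSLT stylesheet that turns the device data into a web page, this Python sketch renders each `<entry>` of the example format as a row of an HTML table, converting the UNIX time stamps into readable UTC dates. The table layout is an arbitrary choice for illustration.

```python
import html
import xml.etree.ElementTree as ET
from datetime import datetime, timezone

NS = {"d": "http://www.example.com/our/dataFormat"}


def to_html_table(xml_text: str) -> str:
    """Render <entry> elements as rows of an HTML table with readable UTC times."""
    root = ET.fromstring(xml_text)
    rows = ["<table>", "<tr><th>Time (UTC)</th><th>Value</th></tr>"]
    for entry in root.findall("d:entry", NS):
        when = datetime.fromtimestamp(int(entry.get("time")), tz=timezone.utc)
        value = html.escape(entry.text or "")  # never trust device text inside HTML
        rows.append(f"<tr><td>{when:%Y-%m-%d %H:%M:%S}</td><td>{value}</td></tr>")
    rows.append("</table>")
    return "\n".join(rows)
```

For the first entry of the example file, time stamp 1237766460 becomes the row `2009-03-23 00:01:00 | 1548`.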

To publish this data online, all that is needed is to configure the routing equipment to allow access to the devices from the outside. If the information needs to be filtered, one solution is a web server hosting an XSLT file that collects the information and publishes only the selected items on request. A typical company layout could look something like this:

Here, the embedded systems could connect to any existing company network infrastructure. Given Ethernet's tree structure, any existing network connection can be expanded to offer enough connectors for all equipment. An existing company server, or a computer set up with Linux if the company does not have one, would collect the XML data from the embedded devices and process it into graphics, web pages, etc. These would then be offered to users on their existing office computers, or over the Internet by allowing public access to the appropriate data on that web server. It is worth mentioning that this implementation is highly portable and will work with existing equipment regardless of hardware manufacturer, installed software, etc.
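The collecting step on the company server could be sketched in Python as follows. The device names and URLs are hypothetical, and the download function is passed in as a parameter so that the merging logic can be tried without real devices. Each device's XML document is placed under one `<site>` element, and a device that is unreachable or returns malformed XML is recorded with an `error` attribute instead of stopping the whole collection.

```python
import urllib.request
import xml.etree.ElementTree as ET
from typing import Callable


def fetch(url: str, timeout: float = 5.0) -> bytes:
    """Download one device's XML document over HTTP."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def collect(devices: dict[str, str], fetcher: Callable[[str], bytes] = fetch) -> ET.Element:
    """Gather every device's XML under a single <site> element.

    A device that cannot be reached or returns malformed XML is kept in the
    result with an error attribute, so one broken sensor does not stop the rest.
    """
    site = ET.Element("site")
    for name, url in devices.items():
        device = ET.SubElement(site, "device", name=name)
        try:
            device.append(ET.fromstring(fetcher(url)))
        except (OSError, ET.ParseError) as exc:  # URLError and timeouts are OSErrors
            device.set("error", type(exc).__name__)
    return site
```

A usage example, with a hypothetical device address, would be `ET.tostring(collect({"reactor1": "http://192.168.0.10/data.xml"}), encoding="unicode")`; the combined document can then be turned into graphics or web pages.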

The firmware and software involved are key to the functionality of the layouts described above. Software connected to your data sources (sensors) will need to acquire the data and offer it using XML over HTTP. The software on your processing device, possibly a web server, will need to process the available XML data into the end-user interface, or assemble the data and offer it in XML (if you wish to filter it). While the software libraries needed to make the job as trivial as possible exist and have been mentioned earlier, a software package is under development to serve as a real-life example of an industrial application that uses XML over HTTP and displays all the characteristics one would expect of a modern software package of this type.
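The filtering step mentioned above can be reduced to a whitelist of element names. The Python sketch below is a stand-in for the XSLT file on the web server; the element names (`temperature`, `ph`, `feedRecipe`) are hypothetical. Only the top-level elements named in `allowed` survive, so internal details never reach the public copy.

```python
import xml.etree.ElementTree as ET


def filter_public(xml_text: str, allowed: set[str]) -> str:
    """Return a copy of the document keeping only the top-level elements in `allowed`.

    Names are compared without their namespace, so "{...}temperature" matches "temperature".
    """
    root = ET.fromstring(xml_text)
    for child in list(root):  # iterate over a copy while removing from the original
        if child.tag.rpartition("}")[2] not in allowed:
            root.remove(child)
    return ET.tostring(root, encoding="unicode")
```

A whitelist rather than a blacklist is used here so that any new element a device starts reporting stays private until someone explicitly decides to publish it.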

The software package offers monitoring and control of the parameters inside a bioreactor. Here are the implementation details:

The software package has been tested and functions as expected.

A solution capable of removing the vast majority of the common information blockades we encounter in our lives today is already available. The step needed to ensure that the transition actually takes place, however, is unlikely to happen on its own. If some of these solutions are implemented ahead of time and their advantages demonstrated, others will likely follow. It may be necessary to establish a non-profit organization that encourages people to use XML over HTTP and to make their data public in order to achieve the desired result.

XML over HTTP is a solution that can give researchers, the media and consumers access to accurate, real-time information about the world around them. With this information, researchers would likely be able to predict and determine the behaviors, results and outcomes of the complex systems in which we live today with an accuracy that is currently deemed impossible, because it is assumed that there is no way to obtain all this information reliably and in real time. With accurate information available as facts provided by impartial machinery, the media would no longer have to make up stories and wander in the dark about the state of the world today. Popular issues such as global warming, the sufficiency of alternative energy sources and environmental contamination would no longer be matters of debate, but rather a trivial problem of putting together a suitable query on the Internet.

The potential of this technology is great, and today's technology already makes something like this possible. We need to take the final step.